Flume: Development Examples

Main content:

- How to write the configuration files for several common Flume usage scenarios

Monitoring port data and sending it to the console

source:netcat

channel:memory

sink:logger

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe/configure the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Describe/configure the sink

a1.sinks.k1.type = logger

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
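To exercise this agent, any TCP client that sends newline-terminated text to the bound port will do. The sketch below is a minimal Python client and is not part of Flume; `send_lines` is a hypothetical helper name, and it assumes the netcat source keeps its default behavior of answering every event with an `OK` line.

```python
import socket


def send_lines(host, port, lines, timeout=5.0):
    """Send each line newline-terminated and collect one reply line per event."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as conn:
        reader = conn.makefile("r", encoding="utf-8", newline="\n")
        for line in lines:
            # The netcat source turns every newline-terminated line into one event.
            conn.sendall((line + "\n").encode("utf-8"))
            # Assumption: the source acknowledges each event with a single line.
            replies.append(reader.readline().strip())
    return replies
```

With the agent running, calling `send_lines("localhost", 44444, ["hello flume"])` should make the event show up in the logger sink's output, which lands on the console when the agent is started with console logging.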

Reading a local file into HDFS in real time

Option 1: (risk of duplicated or lost data, because the exec source does not record its read position)

source:exec

channel:file

sink:hdfs

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /opt/module/hive/logs/hive.log

a1.sources.r1.shell = /bin/bash -c

# Describe/configure the channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

# Describe/configure the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://ip:port/flume/%Y%m%d/%H

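# Prefix for uploaded files

a1.sinks.k1.hdfs.filePrefix = logs-

# Whether to round the timestamp down, so that directories roll over by time

a1.sinks.k1.hdfs.round = true

# Number of time units per directory

a1.sinks.k1.hdfs.roundValue = 1

# Time unit used for rounding

a1.sinks.k1.hdfs.roundUnit = hour

# Whether to use the local timestamp

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before flushing to HDFS

a1.sinks.k1.hdfs.batchSize = 1000

# File type; DataStream is uncompressed, and compressed output is also supported

a1.sinks.k1.hdfs.fileType = DataStream

# How often, in seconds, to roll to a new file

a1.sinks.k1.hdfs.rollInterval = 600

# File size, in bytes, that triggers a roll

a1.sinks.k1.hdfs.rollSize = 134217700

# Rolling does not depend on the number of events

a1.sinks.k1.hdfs.rollCount = 0

# Minimum number of block replicas

a1.sinks.k1.hdfs.minBlockReplicas = 1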

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
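The `round`, `roundValue` and `roundUnit` settings decide which directory an event lands in: the timestamp is rounded down before the escape sequences in `hdfs.path` are expanded. The Python sketch below is a simplified model of that behavior, not Flume's own code; `round_down` and `bucket_path` are hypothetical names, the sample timestamp is made up, and the scheme and host are left out of the path. Python's `strftime` is used only because it shares the meaning of the escapes that appear in these notes (`%Y`, `%y`, `%m`, `%d`, `%H`, `%M`, `%S`).

```python
from datetime import datetime


def round_down(ts, value, unit):
    """Round ts down to a multiple of `value` in `unit`, zeroing the smaller fields."""
    if unit == "hour":
        return ts.replace(hour=ts.hour - ts.hour % value, minute=0, second=0, microsecond=0)
    if unit == "minute":
        return ts.replace(minute=ts.minute - ts.minute % value, second=0, microsecond=0)
    if unit == "second":
        return ts.replace(second=ts.second - ts.second % value, microsecond=0)
    raise ValueError(f"unsupported unit: {unit}")


def bucket_path(pattern, ts, value, unit):
    """Expand the time escapes in `pattern` using the rounded timestamp."""
    return round_down(ts, value, unit).strftime(pattern)


event_time = datetime(2024, 3, 5, 14, 37, 52)  # made-up event time
print(bucket_path("/flume/%Y%m%d/%H", event_time, 1, "hour"))  # /flume/20240305/14
print(bucket_path("/flume/events/%y-%m-%d/%H%M/%S", event_time, 10, "minute"))  # /flume/events/24-03-05/1430/00
```

With hourly rounding every event from 14:00:00 to 14:59:59 shares one directory. With 10-minute rounding the seconds component is always `00`, so a `%S` level in the path adds a directory but never splits the data further.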

Option 2: (safe, no data loss)

source:TAILDIR

channel:file

sink:hdfs

# Name the components on this agent

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /var/log/flume/taildir_position.json

a1.sources.r1.filegroups = f1 f2

a1.sources.r1.filegroups.f1 = /var/log/test1/example.log

a1.sources.r1.filegroups.f2 = /var/log/test2/.*log.*

a1.sources.r1.fileHeader = true

a1.sources.r1.maxBatchCount = 1000

# Describe/configure the channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

# Describe/configure the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

a1.sinks.k1.hdfs.filePrefix = FlumeData-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

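a1.sinks.k1.hdfs.roundUnit = minute

# The time escapes in hdfs.path need a timestamp; TAILDIR events carry no timestamp header, so use the local time

a1.sinks.k1.hdfs.useLocalTimeStamp = true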

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
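What makes this option safe is the `positionFile`: the TAILDIR source records how far it has read in each tailed file, so after a restart it can resume from that offset instead of starting over. The Python sketch below reads such a file. It is an illustration under assumptions: the layout (a JSON array of objects with `inode`, `pos` and `file` keys) is the assumed on-disk format, the sample content is made up, and `read_positions` is a hypothetical helper.

```python
import json


def read_positions(text):
    """Map each tailed file path to the byte offset recorded for it."""
    return {entry["file"]: entry["pos"] for entry in json.loads(text)}


# Made-up sample in the assumed layout of taildir_position.json.
sample = '[{"inode": 2496272, "pos": 1024, "file": "/var/log/test1/example.log"}]'
print(read_positions(sample))  # {'/var/log/test1/example.log': 1024}
```

Comparing the recorded `pos` with the current file size is a quick way to see how far the source is lagging behind the writer.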

Reading files in a directory into HDFS in real time

source:spooldir

channel:file

sink:hdfs

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /opt/module/flume/upload

a1.sources.r1.fileSuffix = .COMPLETED

a1.sources.r1.fileHeader = true

# Describe/configure the channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

# Describe/configure the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://ip:port/flume/upload/%Y%m%d/%H

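# Prefix for uploaded files

a1.sinks.k1.hdfs.filePrefix = upload-

# Whether to round the timestamp down, so that directories roll over by time

a1.sinks.k1.hdfs.round = true

# Number of time units per directory

a1.sinks.k1.hdfs.roundValue = 1

# Time unit used for rounding

a1.sinks.k1.hdfs.roundUnit = hour

# Whether to use the local timestamp

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before flushing to HDFS

a1.sinks.k1.hdfs.batchSize = 100

# File type; DataStream is uncompressed, and compressed output is also supported

a1.sinks.k1.hdfs.fileType = DataStream

# How often, in seconds, to roll to a new file

a1.sinks.k1.hdfs.rollInterval = 600

# File size, in bytes, that triggers a roll (roughly 128 MB)

a1.sinks.k1.hdfs.rollSize = 134217700

# Rolling does not depend on the number of events

a1.sinks.k1.hdfs.rollCount = 0

# Minimum number of block replicas

a1.sinks.k1.hdfs.minBlockReplicas = 1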

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
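The three roll settings act as independent triggers, and a value of 0 switches a trigger off, which is why `rollCount = 0` makes rolling independent of the event count. The Python sketch below models that decision with the values used in these notes. It is a simplified illustration rather than Flume's implementation, and `should_roll` is a hypothetical name. It also checks the size arithmetic: 134217700 is 28 bytes short of 128 MiB.

```python
ROLL_INTERVAL_S = 600        # hdfs.rollInterval, in seconds
ROLL_SIZE_BYTES = 134217700  # hdfs.rollSize, in bytes
ROLL_COUNT = 0               # hdfs.rollCount, 0 disables the trigger


def should_roll(age_s, size_bytes, events):
    """Return True when any enabled trigger (time, size, event count) has fired."""
    by_time = ROLL_INTERVAL_S > 0 and age_s >= ROLL_INTERVAL_S
    by_size = ROLL_SIZE_BYTES > 0 and size_bytes >= ROLL_SIZE_BYTES
    by_count = ROLL_COUNT > 0 and events >= ROLL_COUNT
    return by_time or by_size or by_count


print(128 * 1024 * 1024 - ROLL_SIZE_BYTES)  # 28
print(should_roll(age_s=30, size_bytes=4096, events=10**9))  # False
print(should_roll(age_s=600, size_bytes=4096, events=1))  # True
```

Keeping the roll size just under the HDFS block size is a common way to stop a file from spilling a few bytes into a second block.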

Reading local files into Kafka in real time (key topic)

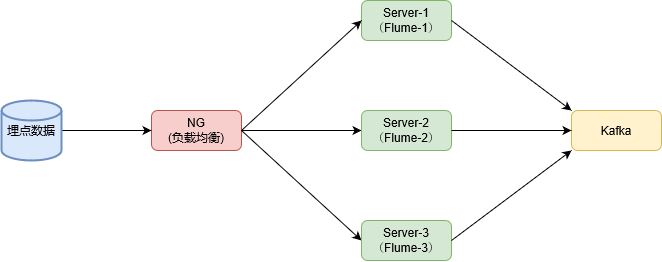

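Scenario: all event-tracking data is sent to the NG server, load-balanced, and distributed evenly across three servers (the number is configurable); the Flume agent on each server then collects the data into Kafka. The figure shows the overall architecture.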

source:TAILDIR

channel:file

sink:kafka

# Name the components on this agent

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /var/log/flume/taildir_position.json

a1.sources.r1.filegroups = f1 f2

a1.sources.r1.filegroups.f1 = /var/log/test1/example.log

a1.sources.r1.headers.f1.topic = topic_a

a1.sources.r1.filegroups.f2 = /var/log/test2/.*log.*

a1.sources.r1.headers.f2.topic = topic_b

a1.sources.r1.fileHeader = false

a1.sources.r1.maxBatchCount = 1000

# Describe/configure the channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

# Describe/configure the sink

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

# Comma-separated list of Kafka brokers, each in ip:port form

a1.sinks.k1.kafka.bootstrap.servers = ip:port,ip:port

a1.sinks.k1.kafka.flumeBatchSize = 100

a1.sinks.k1.kafka.producer.acks = 1

a1.sinks.k1.kafka.producer.compression.type = snappy

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
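No topic is set on the sink in this configuration. Instead, each file group attaches a `topic` header to its events, and the Kafka sink publishes an event to the topic named in that header. The Python sketch below models the routing, assuming group `f1` is meant to map to `topic_a` and `f2` to `topic_b`. It is an illustration and not Flume code: `group_for` and `topic_for` are hypothetical names, and the sketch assumes the regular expression in a file group applies to the file name only, with the directory part taken literally.

```python
import posixpath
import re

FILEGROUPS = {
    "f1": "/var/log/test1/example.log",
    "f2": "/var/log/test2/.*log.*",
}
GROUP_TOPIC = {"f1": "topic_a", "f2": "topic_b"}


def group_for(path):
    """Return the first file group whose directory and file-name regex match path."""
    for name, pattern in FILEGROUPS.items():
        directory, file_regex = posixpath.split(pattern)
        same_dir = posixpath.dirname(path) == directory
        if same_dir and re.fullmatch(file_regex, posixpath.basename(path)):
            return name
    return None  # the file is not tailed at all


def topic_for(path):
    """Return the topic header value for events read from path, or None if untailed."""
    return GROUP_TOPIC.get(group_for(path))


print(topic_for("/var/log/test1/example.log"))  # topic_a
print(topic_for("/var/log/test2/app.log.1"))  # topic_b
print(topic_for("/tmp/other.txt"))  # None
```

Routing by header lets one agent feed several topics through a single channel and sink, at the cost of every file group sharing the same producer settings.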

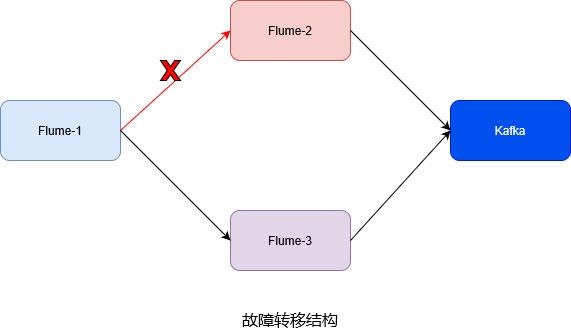

Single source, multiple outlets (sink group): failover

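Flume-1 has a single source, a single channel, and multiple sinks that form a sink group. If Flume-2 or Flume-3 fails, traffic fails over to the other one. Flume-1 can be configured as follows: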

# Name the components on this agent

a1.sources = r1

a1.channels = c1

a1.sinks = k1 k2

a1.sinkgroups = g1

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /var/log/flume/taildir_position.json

a1.sources.r1.filegroups = f1 f2

a1.sources.r1.filegroups.f1 = /var/log/test1/example.log

a1.sources.r1.filegroups.f2 = /var/log/test2/.*log.*

a1.sources.r1.fileHeader = true

a1.sources.r1.maxBatchCount = 1000

# Describe/configure the channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

# Describe/configure the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop1

a1.sinks.k1.port = 4141

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop1

a1.sinks.k2.port = 4142

# Describe/configure the sink groups

a1.sinkgroups.g1.sinks = k1 k2

# "failover" selects the failover strategy

a1.sinkgroups.g1.processor.type = failover

# Assign priorities to k1 and k2 (k2 has the higher value, so it is tried first)

a1.sinkgroups.g1.processor.priority.k1 = 5

a1.sinkgroups.g1.processor.priority.k2 = 10

a1.sinkgroups.g1.processor.maxpenalty = 10000

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1
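The failover processor always sends to the available sink with the highest priority and falls back to the next one only while the preferred sink is failing; `maxpenalty` caps the backoff period of a failed sink, in milliseconds. The Python sketch below models just the selection step with the priorities from this configuration. It is a simplified illustration, `pick_sink` is a hypothetical name, and the penalty timing is left out.

```python
def pick_sink(priorities, failed=frozenset()):
    """Return the live sink with the highest priority, or None if all have failed."""
    live = {name: prio for name, prio in priorities.items() if name not in failed}
    if not live:
        return None
    return max(live, key=live.get)


PRIORITIES = {"k1": 5, "k2": 10}
print(pick_sink(PRIORITIES))  # k2
print(pick_sink(PRIORITIES, failed={"k2"}))  # k1
```

Once k2 recovers and its penalty has expired, it becomes the preferred sink again, because selection depends only on priority among the sinks that are currently live.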

Single source, multiple outlets (sink group): load balancing

Flume-1 can be configured as follows (only the sink group section of the configuration above changes):

# Describe/configure the sink groups

a1.sinkgroups.g1.sinks = k1 k2

# "load_balance" selects the load-balancing strategy

a1.sinkgroups.g1.processor.type = load_balance

a1.sinkgroups.g1.processor.backoff = true

# Selection strategy: round_robin or random

a1.sinkgroups.g1.processor.selector = random
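The two selection strategies differ only in how the next sink is chosen, and with `backoff = true` a sink that recently failed is skipped for a while. The Python sketch below models both selectors. It is an illustration under assumptions, not Flume's implementation: `RoundRobinSelector` and `pick_random` are hypothetical names, and the backed-off sinks are passed in by hand instead of being tracked with timers.

```python
import random


class RoundRobinSelector:
    """Hand out sinks in a fixed repeating order, skipping backed-off ones."""

    def __init__(self, sinks):
        self.sinks = list(sinks)
        self.index = 0

    def next(self, backed_off=()):
        # Try each sink at most once per call, starting where the last call stopped.
        for _ in range(len(self.sinks)):
            sink = self.sinks[self.index]
            self.index = (self.index + 1) % len(self.sinks)
            if sink not in backed_off:
                return sink
        return None


def pick_random(sinks, backed_off=(), rng=random):
    """Choose uniformly among the sinks that are not backed off."""
    candidates = [sink for sink in sinks if sink not in backed_off]
    return rng.choice(candidates) if candidates else None


selector = RoundRobinSelector(["k1", "k2"])
print([selector.next() for _ in range(4)])  # ['k1', 'k2', 'k1', 'k2']
print(selector.next(backed_off={"k1"}))  # k2
```

Round robin spreads batches evenly by construction, while random selection evens out only over many batches.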